Hackers Can Shine Lasers at Your Alexa Device and Do Bad, Bad Things to It

Move the Echo away from the window. Now.

- A new paper funded by DARPA and a Japanese organization for the promotion of science and technology finds that simple lasers can basically hack into voice-controlled assistants.

- Researchers are able to use lasers to inject malicious commands into smart devices, even remotely starting a victim's car if it's connected through a Google account.

- To be safe, keep your voice devices away from windows in your home.

Keep Alexa away from all windows: Turns out hackers can shine lasers at your Google Assistant or Amazon Alexa-enabled devices and gain control of them, sending commands to the smart assistants or obtaining your valuable account information.

Researchers proved this by using lasers to inject malicious commands into voice-controlled devices like smart speakers, tablets, and phones across long distances through glass windowpanes.

In a new paper, "Light Commands: Laser-Based Audio Injection Attacks on Voice-Controllable Systems," the authors describe the laser-based vulnerability as a "signal injection attack" on microphones based on the photoacoustic effect, which converts light to sound through a microphone. From the abstract:

We show how an attacker can inject arbitrary audio signals to the target microphone by aiming an amplitude-modulated light at the microphone’s aperture. We then proceed to show how this effect leads to a remote voice-command injection attack on voice-controllable systems. Examining various products that use Amazon’s Alexa, Apple’s Siri, Facebook’s Portal, and Google Assistant, we show how to use light to obtain full control over these devices at distances up to 110 meters and from two separate buildings.

User authentication is lacking or nonexistent on voice assistant devices, the researchers also found. That meant they could use the light-injected voice commands to unlock the victim's smart lock-enabled doors, shop on their e-commerce sites, use their payment methods, or even unlock and start vehicles connected to the victim's Google account.

The research—funded by the Japan Society for the Promotion of Science and the U.S. Defense Advanced Research Projects Agency (DARPA), among other organizations—was meant to uncover possible security vulnerabilities in Internet of Things devices.

"While much attention is being given to improving the capabilities of [voice controlled] systems, much less is known about the resilience of these systems to software and hardware attacks," the authors write in the paper. "Indeed, previous works already highlight a major limitation of voice-only user interaction: the lack of proper user authentication."

So how does it work? The mics convert sound into electrical signals, sure, but they also react to light aimed directly at them. By encoding an electrical signal in the intensity of a light beam (making the beam brighter and dimmer in step with an audio waveform), the researchers could trick the microphones embedded in the voice devices into producing electrical signals as if they were receiving real audio, like your voice. That means hackers can inject commands through the light beam without making a sound.
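To make the modulation idea concrete, here is a minimal Python sketch of how an audio waveform might be mapped onto a laser's drive current. It is an illustration only, not the researchers' setup: the function names and the bias and depth values (in milliamps) are assumptions chosen for the example.

```python
import math


def tone(freq_hz, duration_s, sample_rate=16000):
    """Generate a sine tone with samples in [-1, 1] (stand-in for recorded speech)."""
    n = int(round(duration_s * sample_rate))
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def modulate_laser_current(audio, bias_ma=200.0, depth_ma=150.0):
    """Map audio samples to a diode drive current in mA.

    bias_ma and depth_ma are hypothetical values: a constant bias keeps the
    laser on, and the audio swings the current (and so the brightness)
    by depth_ma peak to peak around that bias.
    """
    currents = []
    for sample in audio:
        clipped = max(-1.0, min(1.0, sample))  # guard against out-of-range samples
        currents.append(bias_ma + (depth_ma / 2.0) * clipped)
    return currents


# A 10 ms, 1 kHz test tone becomes a current that rises and falls with the audio.
drive = modulate_laser_current(tone(1000, 0.01))
```

The microphone on the receiving end responds to those brightness changes much as it would to sound pressure, which is why the assistant "hears" a command.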

Devices are vulnerable from up to 110 meters away, as of the time of the paper's publication. That's about the length of a football field.

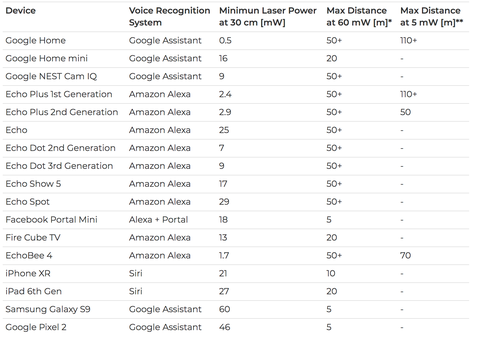

Pretty much any voice-enabled device you can imagine is vulnerable to this kind of attack, but the authors have tested and confirmed vulnerabilities in the following:

Perhaps the most disturbing part is how easy this kind of hack is. All you need, according to the research group, is a simple laser pointer, a laser diode driver (which supplies a steady, controllable current to the laser), a sound amplifier, and a telephoto lens to focus the laser for long-range attacks.

While this research and the associated hacks were conducted by professional researchers, criminals could do the same (though the researchers found no instances of this happening maliciously ... yet). With the methodology out there now, it's a double-edged sword: Yes, some consumers may read about this vulnerability and prepare against it, but now hackers also have new ideas.

It's pretty difficult to tell if you're being attacked this way, but you may notice the light beam's reflection on the device in question, or you can watch for unexpected verbal responses and changes in the device's light pattern. If you notice your device acting erratically, unplug the damn thing and move it away from the window.

The good news is that there are ways to protect yourself from a laser-based attack, according to the paper:

An additional layer of authentication can be effective at somewhat mitigating the attack. Alternatively, in case the attacker cannot eavesdrop on the device's response, having the device ask the user a simple randomized question before command execution can be an effective way at preventing the attacker from obtaining successful command execution.

Manufacturers can also attempt to use sensor fusion techniques, such as acquire audio from multiple microphones. When the attacker uses a single laser, only a single microphone receives a signal while the others receive nothing. Thus, manufacturers can attempt to detect such anomalies, ignoring the injected commands.

Another approach consists in reducing the amount of light reaching the microphone's diaphragm using a barrier that physically blocks straight light beams for eliminating the line of sight to the diaphragm, or implement a non-transparent cover on top of the microphone hole for attenuating the amount of light hitting the microphone. However, we note that such physical barriers are only effective to a certain point, as an attacker can always increase the laser power in an attempt to compensate for the cover-induced attenuation or for burning through the barriers, creating a new light path.
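The multi-microphone check from the paper's list of defenses can be sketched in a few lines of Python. This is a simplified illustration, not any manufacturer's implementation: the function names and the loudness thresholds are assumptions, and a real device would need to handle noise and microphones of differing sensitivity.

```python
import math


def rms(samples):
    """Root-mean-square level of one microphone channel."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def looks_like_single_mic_injection(channels, active_rms=0.05, silent_rms=0.005):
    """Flag input where exactly one mic hears a signal and all others hear nothing.

    channels is a list of per-microphone sample lists. The thresholds are
    hypothetical: real speech should reach every mic, while a single laser
    spot excites only the one it is aimed at.
    """
    levels = [rms(ch) for ch in channels]
    active = sum(1 for lv in levels if lv >= active_rms)
    silent = sum(1 for lv in levels if lv <= silent_rms)
    return len(levels) > 1 and active == 1 and silent == len(levels) - 1


spoken = [[0.3, -0.3, 0.3, -0.3]] * 4                    # every mic hears the voice
lasered = [[0.3, -0.3, 0.3, -0.3]] + [[0.0] * 4] * 3     # only one mic "hears" anything
print(looks_like_single_mic_injection(spoken))   # False
print(looks_like_single_mic_injection(lasered))  # True
```

A device could ignore any command that trips this check, though an attacker with several lasers, one per microphone, would get around it.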
